Postcode lookup

During some discussions I started wondering how hard it would be to build a postcode lookup service that offers some level of suggestion and autocompletion of the user’s address.

A couple of months ago I was playing around with creating realistic-looking data for populating test environments. That effort did not extend to address information that I could use to try building the lookup service.

I decided that generating fake (invalid) postcodes and related information would not be as useful, because so much other information is tied to the postcode. To provide useful address information, at least the postcode is needed, and it would be a bonus to have other address information that aligns correctly with it, such as geolocation, street address, and county. The easiest way to get such data would probably be to source real data.

Sourcing data

The requirement for sourced data was that it needed to be freely licenced and my first port of call was the gov.uk websites to see what information I could download directly from government sources.

There was a lot of information on the government website, but with a little bit of Googling I came across https://www.postcodes-uk.com/postcode-areas which has some good data, and also https://www.doogal.co.uk/PostcodeDownloads.php

I downloaded one of the zip files from Doogal, which was 1.2 GB uncompressed. Looking at the first 10 lines of the file, the format was easy to understand and showed that there was more data than I required. I knocked up a quick script that would read the headers and number them so that selecting the relevant fields would be easier:

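    import csv

    with open("postcodes.csv", "r", newline="") as f:
        csv_data = csv.reader(f, delimiter=",", quotechar='"')
        # Only the first row (the headers) is needed
        header_row = next(csv_data)
        # Print each header alongside its column number
        for ee, item in enumerate(header_row):
            print(str(ee), item)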

After some trial and error with the fields, the most recognisable combination of address data was fields 0,7,8,12,17,21,31, which translated to postcode,county,district,country,parish,area1,area2 (or near enough).

Some of the fields were empty depending on the record but this combination usually provided the correct information across all these fields.

To process the CSV I iterated through each line, read out the wanted data and output that as JSON using the following:

    import csv
    import json

    # Column numbers to keep, and the names to give them in the output
    colnums = [0, 7, 8, 12, 17, 21, 31]
    headers = ["postcode", "county", "district", "country", "parish", "area1", "area2"]
    limit = -1  # was set to 10 to limit, -1 for unlimited

    overall_array = []
    with open("postcodes.csv", "r", newline="") as f:
        csv_data = csv.reader(f, delimiter=",", quotechar='"')
        next(csv_data, None)  # skip the header row so it is not output as a record
        for e, row in enumerate(csv_data):
            if e == limit:
                break
            # Map each wanted column onto its output name
            overall_array.append({name: row[col] for name, col in zip(headers, colnums)})

    with open("py_clean.json", "w") as ff:
        ff.write(json.dumps(overall_array))

which resulted in each record being output as in the example below:

{"postcode": "AB1 0AA", "county": "", "district": "Aberdeen City", "country": "Scotland", "parish": "", "area1": "", "area2": "Cults, Bieldside and Milltimber West"}

Once the whole file was processed, the output was 490MB.

Reading the processed data

Before trying to build a web service using the data I wrote a little script that allowed me to read in the new file and search through the data based on postcode.

The script reads the file into memory and presents a prompt for the postcode:

Once entered, the script looks through the data for a number of postcodes that start with the value entered before prompting for another postcode. This allows for partial postcodes to be entered and for “suggestions” to be made.

[[screenshot]]
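As a rough illustration, a search script along these lines could look something like the sketch below. It assumes the `py_clean.json` file produced earlier. The function names (`load_records`, `find_matches`, `main`), the prompt text, and the limit of five suggestions are my own placeholders rather than the original script.

```python
import json


def load_records(path):
    """Read the cleaned JSON file (a list of dicts) into memory."""
    with open(path, "r") as f:
        return json.load(f)


def find_matches(records, prefix, limit=5):
    """Return up to `limit` records whose postcode starts with `prefix`."""
    prefix = prefix.upper()  # postcodes in the data are upper case
    matches = []
    for record in records:
        if record["postcode"].startswith(prefix):
            matches.append(record)
            if len(matches) == limit:
                break
    return matches


def main():
    """Prompt repeatedly for a (partial) postcode until a blank line is entered."""
    records = load_records("py_clean.json")
    while True:
        prefix = input("Postcode (blank to quit): ").strip()
        if not prefix:
            break
        for record in find_matches(records, prefix):
            print(record["postcode"], "|", record["district"], "|", record["area2"])
```

Calling `main()` starts the prompt. The search is a plain linear scan that stops as soon as enough suggestions have been found, which is simple but means every lookup may walk a large part of the list.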

Creating this script helped surface some problems. Firstly, reading the file into memory was taking a fair amount of time, clocking in at 8 seconds. Secondly, the amount of RAM taken up was in the order of 2.7GB.

Fixing the processed data

To try and address both issues I changed my cleaning script to output in CSV format instead of JSON, which resulted in the clean file being … and loading into my test search script in …. seconds.
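A minimal sketch of what the CSV version of the cleaning step might look like, assuming the same column selection as the JSON version. The function name `clean_to_csv` and the output filename used in the usage note are hypothetical, and the sketch makes no claim about the resulting file size or load time.

```python
import csv

# Same column selection as the JSON version of the cleaning script
COLNUMS = [0, 7, 8, 12, 17, 21, 31]
HEADERS = ["postcode", "county", "district", "country", "parish", "area1", "area2"]


def clean_to_csv(src_path, dst_path):
    """Copy only the wanted columns from src_path into dst_path, row by row."""
    with open(src_path, "r", newline="") as src, open(dst_path, "w", newline="") as dst:
        reader = csv.reader(src, delimiter=",", quotechar='"')
        writer = csv.writer(dst)
        next(reader, None)  # skip the original header row
        writer.writerow(HEADERS)  # write the shortened header row instead
        for row in reader:
            writer.writerow([row[col] for col in COLNUMS])
```

It would be called as `clean_to_csv("postcodes.csv", "py_clean.csv")`. Writing row by row avoids building the whole list in memory first, and CSV does not repeat the field names for every record the way the JSON output does.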

Creating the web service

Using Flask I created a quick API endpoint that a web form could submit some data to. It would effectively do the same as my CLI script, searching the clean data and then returning the suggestions.

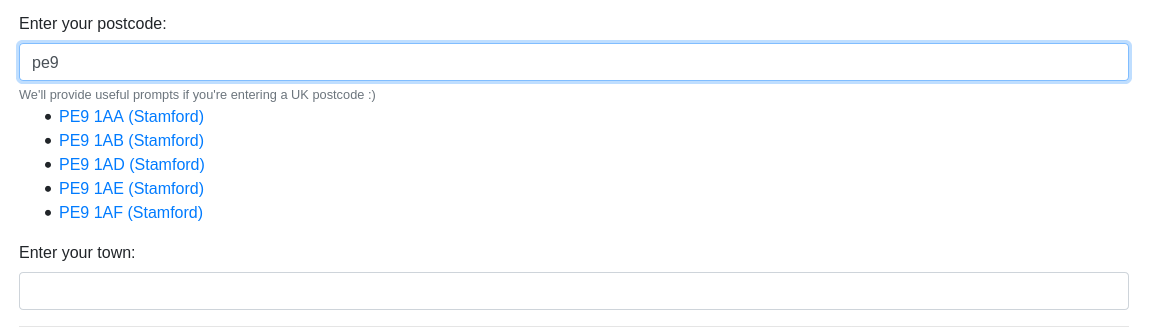

Making the API request https://test-env/api/postcode/pe9 returns:

[{"a":"PE9 1AA","d":"Stamford"},{"a":"PE9 1AB","d":"Stamford"},{"a":"PE9 1AD","d":"Stamford"},{"a":"PE9 1AE","d":"Stamford"},{"a":"PE9 1AF","d":"Stamford"}]
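For illustration, an endpoint with this URL shape and response format could be wired up in Flask roughly as sketched below. This is not the original service code: the sample records, the names `suggest` and `create_app`, and the reading of `"a"` as the postcode and `"d"` as a town name are assumptions inferred from the response above.

```python
# Hypothetical in-memory sample; a real service would load the cleaned file at startup.
RECORDS = [
    ("PE9 1AA", "Stamford"),
    ("PE9 1AB", "Stamford"),
    ("AB1 0AA", "Aberdeen City"),
]


def suggest(records, prefix, limit=5):
    """Return up to `limit` suggestions whose postcode starts with `prefix`."""
    wanted = prefix.lower()
    results = []
    for postcode, town in records:
        if postcode.lower().startswith(wanted):
            results.append({"a": postcode, "d": town})
            if len(results) == limit:
                break
    return results


def create_app(records):
    """Build a Flask app exposing the lookup at /api/postcode/<prefix>."""
    # Imported here so suggest() can be used on its own without Flask installed
    from flask import Flask, jsonify

    app = Flask(__name__)

    @app.route("/api/postcode/<prefix>")
    def postcode_lookup(prefix):
        # jsonify serialises the list of suggestions as a JSON array
        return jsonify(suggest(records, prefix))

    return app
```

Running `create_app(RECORDS).run()` would start Flask's development server, after which a request to `/api/postcode/pe9` returns the two Stamford entries from the sample data.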

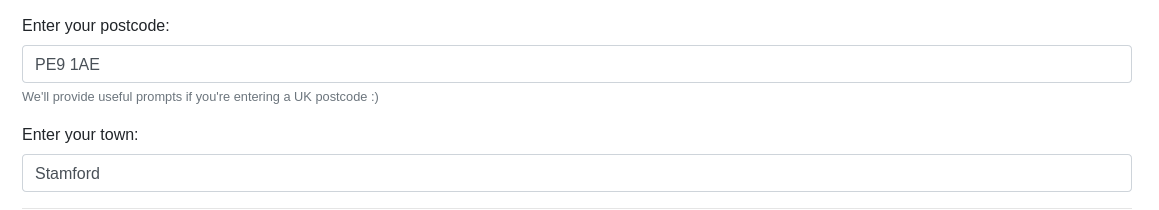

Using Vue.js I had the response add the suggestions underneath the search box on the web form. Each entry was clickable and entered the county data into the subsequent county input field.

The empty form looked like:

and after some user input starts suggesting:

and after selecting a suggestion:

Not quite as good as the Royal Mail postcode finder… but good enough. Now I’ve got postcode and corresponding area data that I can use as a source for generating data, and I’ve seen that it’s not too hard to create the service as I originally intended.

Issues

Testing on mobile devices revealed an issue: they don’t seem to trigger the keyup event unless a space is pressed, which is slightly limiting.

Front end logic:

- On clicking a returned value, the “d” value will be placed in the town input field

- The user input value will be sent on each key press, so that the API results can be rendered while the user types

API logic:

- A minimum number of characters is required before the API returns anything (default: 5)

- Spaces at the start, and any between characters, are removed

- Everything is lower-cased for the comparison
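The API rules above could be sketched in Python as follows. The names `normalise`, `lookup`, and `MIN_CHARS` are placeholders of mine, and applying the minimum length after the spaces have been removed is an assumption, since the list does not say which way round it works.

```python
MIN_CHARS = 5  # default minimum, taken from the list above


def normalise(value):
    """Remove all whitespace (leading, trailing, and internal) and lower-case."""
    return "".join(value.split()).lower()


def lookup(postcodes, user_input, min_chars=MIN_CHARS, limit=5):
    """Return matching postcodes, or an empty list if the input is too short."""
    wanted = normalise(user_input)
    # Assumption: the minimum applies to the input after spaces are stripped
    if len(wanted) < min_chars:
        return []
    found = []
    for postcode in postcodes:
        # Normalise the stored postcode too, so "PE9 1AA" matches "pe91a"
        if normalise(postcode).startswith(wanted):
            found.append(postcode)
            if len(found) == limit:
                break
    return found
```

With this sketch, `lookup(["PE9 1AA", "PE9 1AB"], " pe9 1a")` matches both postcodes, while a three-character input such as `"pe9"` returns nothing unless `min_chars` is lowered.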