Creating a basic sheep dip with AWS Lambda and S3


I’ve been spending some time creating a very simple Sheep Dip using S3 and the VirusTotal API. By uploading a file to an S3 bucket I can get Lambda to trigger and generate a SHA256 hash for the file and add that to the file’s metadata. I can also check the hash against VirusTotal to see if it’s a known bad file.

Files are manually uploaded through the AWS console or any other means of getting a file to a non-public bucket.

Having spent some time moving files around and setting up the functions, I've documented the most important parts here.

Setup

I started off by creating a new IAM role for Lambda to use when reading from and writing to S3. I named the role sheep-dip and gave it full S3 permissions, plus permission to write logs to CloudWatch.
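Full S3 access works, but the function only needs to list this one bucket, read its objects and write them back. Below is a minimal sketch of a narrower policy document, built in Python so the bucket name is filled in. The helper name `sheep_dip_policy` is hypothetical, and the action list is an assumption based on the S3 calls the function makes, not the policy actually attached to the sheep-dip role:

```python
import json


def sheep_dip_policy(bucket_name: str) -> str:
    """Build a narrower IAM policy document for the sheep-dip role (hypothetical).

    Assumption: the function only lists the bucket, reads objects and writes
    objects back, plus the usual CloudWatch Logs actions.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket is granted on the bucket ARN itself, not on its objects.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading objects and writing them back inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
            {
                # The same log actions as the AWSLambdaBasicExecutionRole managed policy.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": ["*"],
            },
        ],
    }
    return json.dumps(policy, indent=2)


print(sheep_dip_policy("upload-s3.test.chrisw.io"))
```

The printed JSON could be pasted into an inline policy on the role in place of the full S3 permissions.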

I created a private bucket called upload-s3.test.chrisw.io.

I uploaded two cat pictures to the S3 bucket for developing and testing against. On my local machine I checked the SHA256 hashes for the files, to compare against later.

```
sha256sum cat*.jpg

71fb1de9c50a9bf53a9532d42540caf8ec41165bb967d8d1c683cf6a30b193b9 cat1.jpg
38ccda148770190cacb9a6172b9bae18924da2f0f54af388eed0e57588681d98 cat2.jpg
```
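Where `sha256sum` isn't available, the same local check can be done with Python's `hashlib`. This is a minimal sketch; the function name `sha256_file` and the 1 MiB chunk size are illustrative choices. Reading in chunks keeps memory use flat regardless of file size:

```python
import hashlib


def sha256_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a file, reading it in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b"" (EOF).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Because SHA-256 is deterministic, `print(sha256_file("cat1.jpg"))` should print the same digest that `sha256sum` reports for that file.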

Creating the Lambda function

I created a new Lambda function and assigned it the sheep-dip role.

I wanted to first test that Lambda could use the role to list all of the items in my bucket, to make sure my IAM role and S3 configuration were in order. Using Boto3 and some code samples from the documentation, I ran the following code:

```python
import json
import boto3


def lambda_handler(event, context):
    s3 = boto3.resource('s3')
    bucket = s3.Bucket('upload-s3.test.chrisw.io')
    for obj in bucket.objects.all():
        key = obj.key
        print(key)
    return
```

which resulted in:

```
Response:
null

Request ID:
"32aa86e4-168b-4060-b585-4897f649d9d5"

Function logs:
START RequestId: 32aa86e4-168b-4060-b585-4897f649d9d5 Version: $LATEST
cat1.jpg
cat2.jpg
END RequestId: 32aa86e4-168b-4060-b585-4897f649d9d5
REPORT RequestId: 32aa86e4-168b-4060-b585-4897f649d9d5 Duration: 263.97 ms Billed Duration: 300 ms Memory Size: 128 MB Max Memory Used: 79 MB
```

The cat pics are listed with no errors, showing that the role is working correctly.

To prove that I could read the contents of the files, I wrote code that uses hashlib to create a SHA256 hash of each file's contents. Running the following code:

```python
import json
import boto3
import hashlib


def lambda_handler(event, context):
    s3 = boto3.resource('s3')
    bucket = s3.Bucket('upload-s3.test.chrisw.io')
    for obj in bucket.objects.all():
        key = obj.key
        file_hash = hashlib.sha256()
        file_hash.update(obj.get()["Body"].read())
        print(file_hash.hexdigest())
    return
```

resulted in:

```
Response:
null

Request ID:
"e79b0719-0165-4320-916c-dab5c302cae5"

Function logs:
START RequestId: e79b0719-0165-4320-916c-dab5c302cae5 Version: $LATEST
71fb1de9c50a9bf53a9532d42540caf8ec41165bb967d8d1c683cf6a30b193b9
38ccda148770190cacb9a6172b9bae18924da2f0f54af388eed0e57588681d98
END RequestId: e79b0719-0165-4320-916c-dab5c302cae5
REPORT RequestId: e79b0719-0165-4320-916c-dab5c302cae5 Duration: 1987.40 ms Billed Duration: 2000 ms Memory Size: 128 MB Max Memory Used: 79 MB Init Duration: 258.04 ms
```
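The handler reads each object's whole body into memory with `.read()`. That is fine for cat pictures, but it roughly ties the largest file that can be hashed to the function's memory setting (128 MB in these runs). A minimal sketch of hashing the body in chunks instead, assuming a hypothetical helper `sha256_of_stream` and a 1 MiB chunk size. boto3's `StreamingBody` supports `read(amt)`, and `io.BytesIO` stands in for it here:

```python
import hashlib
import io


def sha256_of_stream(body, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file-like object (e.g. a boto3 StreamingBody) chunk by chunk.

    Only one chunk is held in memory at a time.
    """
    digest = hashlib.sha256()
    while True:
        chunk = body.read(chunk_size)
        if not chunk:  # read() returns b"" once the stream is exhausted
            break
        digest.update(chunk)
    return digest.hexdigest()


# In the handler this could replace file_hash.update(obj.get()["Body"].read()):
#     digest = sha256_of_stream(obj.get()["Body"])
print(sha256_of_stream(io.BytesIO(b"abc"), chunk_size=2))
# prints ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad
```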

The two hashes in the output match the ones that I produced earlier on my local machine. To get the metadata updated for each object, I decided to test the following code:

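```python
import json
import boto3
import hashlib


def lambda_handler(event, context):
    s3 = boto3.resource('s3')
    bucket = s3.Bucket('upload-s3.test.chrisw.io')
    for obj in bucket.objects.all():
        key = obj.key
        response = obj.get()
        file_hash = hashlib.sha256()
        file_hash.update(response["Body"].read())
        digest = file_hash.hexdigest()
        print(digest)
        # S3 metadata can't be edited in place, so copy the object onto itself
        # with new metadata. obj.put() with no Body would replace the file with
        # an empty object.
        s3.meta.client.copy_object(
            Bucket=bucket.name, Key=key,
            CopySource={"Bucket": bucket.name, "Key": key},
            Metadata={"sha256_digest": digest},
            MetadataDirective="REPLACE",
            ContentType=response.get("ContentType", "binary/octet-stream"),
        )
    return
```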

This worked, but running the Lambda function multiple times resulted in an AWS API error because the metadata was being updated too frequently on each object.
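Calling `put()` with only `Metadata` sends a PutObject request with no body. PutObject always replaces the whole object, so the stored file is swapped for an empty one. A common way to change just the metadata is to copy the object onto itself with `MetadataDirective="REPLACE"`. REPLACE keeps only the metadata sent with the request, so the sketch below carries over any existing user metadata and the content type before adding the digest. `metadata_update_request` is a hypothetical helper; its result is meant to be passed as keyword arguments to boto3's `copy_object`:

```python
def metadata_update_request(bucket: str, key: str, digest: str,
                            existing_metadata: dict, content_type: str) -> dict:
    """Build keyword arguments for an S3 CopyObject call that rewrites metadata.

    The object is copied onto itself. With MetadataDirective="REPLACE" only the
    metadata in the request is kept, so existing user metadata and the content
    type are supplied again alongside the new digest.
    """
    metadata = dict(existing_metadata)  # copy, so the caller's dict is untouched
    metadata["sha256_digest"] = digest
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        "Metadata": metadata,
        "MetadataDirective": "REPLACE",
        "ContentType": content_type,
    }


# Hypothetical usage in the handler, given a boto3 S3 resource `s3` and
# response = obj.get():
#     s3.meta.client.copy_object(**metadata_update_request(
#         obj.bucket_name, obj.key, digest,
#         response["Metadata"], response["ContentType"]))
request = metadata_update_request(
    "upload-s3.test.chrisw.io", "cat3.jpg",
    "5f618eda843d046407fad59cec8a33fb51a781a2ae12dabe9158ef1c943676db",
    {}, "image/jpeg")
print(request["Metadata"])
```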

To avoid unnecessary work, I modified the code to check whether the hash was already in the metadata. Only when it wasn't would the function read the object, compute the hash and update the object's metadata.

This logic looked as below:

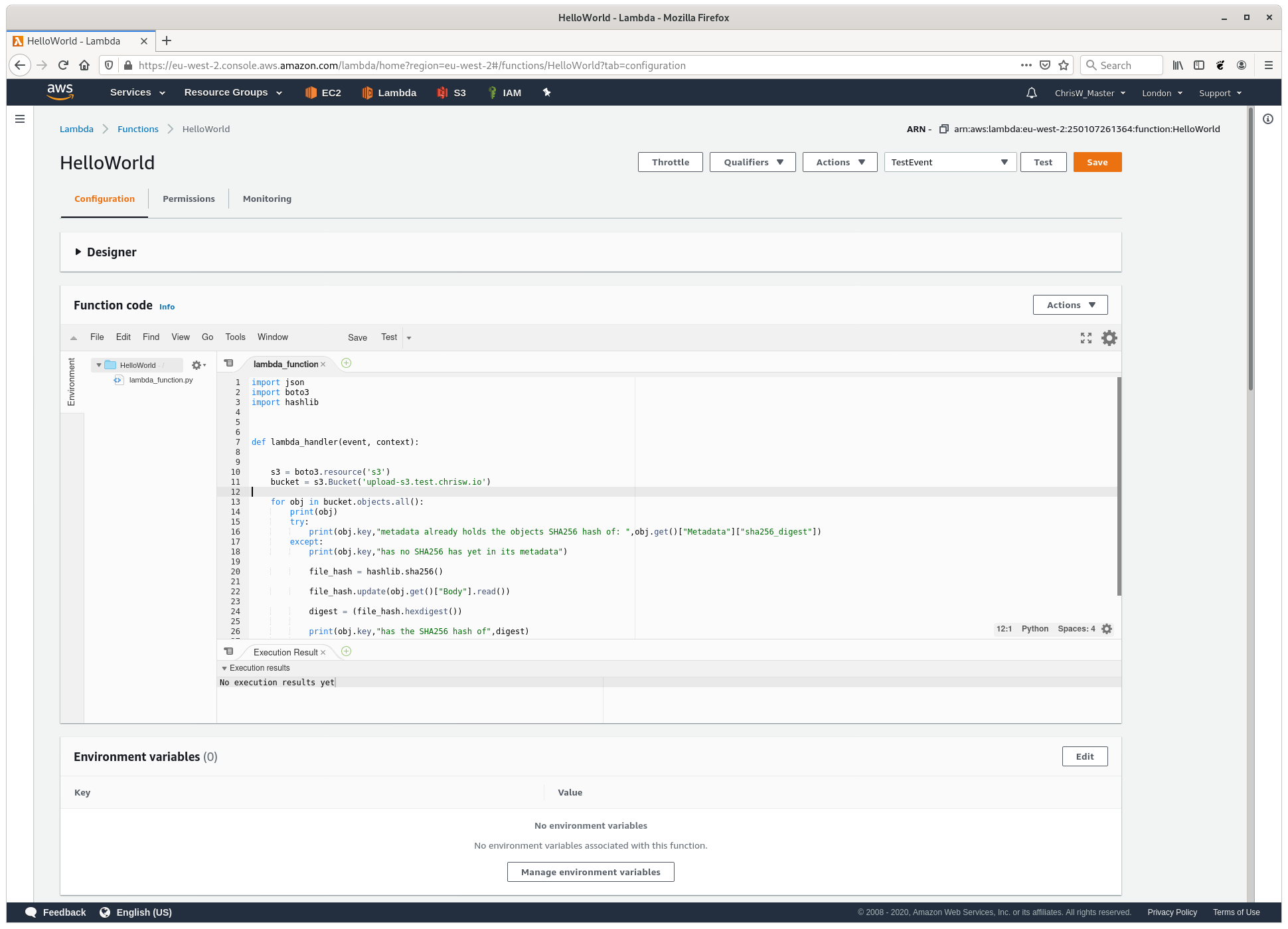

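```python
import json
import boto3
import hashlib


def lambda_handler(event, context):
    s3 = boto3.resource('s3')
    bucket = s3.Bucket('upload-s3.test.chrisw.io')
    for obj in bucket.objects.all():
        print(obj)
        # One GetObject call returns the metadata, content type and body stream.
        response = obj.get()
        metadata = response.get("Metadata", {})
        if "sha256_digest" in metadata:
            print(obj.key, "metadata already holds the objects SHA256 hash of: ", metadata["sha256_digest"])
            continue
        print(obj.key, "has no SHA256 has yet in its metadata")
        file_hash = hashlib.sha256()
        file_hash.update(response["Body"].read())
        digest = file_hash.hexdigest()
        print(obj.key, "has the SHA256 has of", digest)
        # Copy the object onto itself to replace its metadata without touching the data.
        s3.meta.client.copy_object(
            Bucket=bucket.name, Key=obj.key,
            CopySource={"Bucket": bucket.name, "Key": obj.key},
            Metadata={"sha256_digest": digest},
            MetadataDirective="REPLACE",
            ContentType=response.get("ContentType", "binary/octet-stream"),
        )
    return
```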

On the first run, with new contents in the S3 bucket:

```
Response:
null

Request ID:
"24b8336b-7d77-4572-8ea3-c1f1080ea85f"

Function logs:
START RequestId: 24b8336b-7d77-4572-8ea3-c1f1080ea85f Version: $LATEST
s3.ObjectSummary(bucket_name='upload-s3.test.chrisw.io', key='cat1.jpg')
cat1.jpg has no SHA256 has yet in its metadata
cat1.jpg has the SHA256 has of 71fb1de9c50a9bf53a9532d42540caf8ec41165bb967d8d1c683cf6a30b193b9
s3.ObjectSummary(bucket_name='upload-s3.test.chrisw.io', key='cat2.jpg')
cat2.jpg metadata already holds the objects SHA256 hash of: 38ccda148770190cacb9a6172b9bae18924da2f0f54af388eed0e57588681d98
END RequestId: 24b8336b-7d77-4572-8ea3-c1f1080ea85f
REPORT RequestId: 24b8336b-7d77-4572-8ea3-c1f1080ea85f Duration: 642.78 ms Billed Duration: 700 ms Memory Size: 128 MB Max Memory Used: 82 MB
```

and on the second run:

```
Response:
null

Request ID:
"96bf2b50-a884-4cb5-9d57-dda9d19dd4b8"

Function logs:
START RequestId: 96bf2b50-a884-4cb5-9d57-dda9d19dd4b8 Version: $LATEST
s3.ObjectSummary(bucket_name='upload-s3.test.chrisw.io', key='cat1.jpg')
cat1.jpg metadata already holds the objects SHA256 hash of: 71fb1de9c50a9bf53a9532d42540caf8ec41165bb967d8d1c683cf6a30b193b9
s3.ObjectSummary(bucket_name='upload-s3.test.chrisw.io', key='cat2.jpg')
cat2.jpg metadata already holds the objects SHA256 hash of: 38ccda148770190cacb9a6172b9bae18924da2f0f54af388eed0e57588681d98
END RequestId: 96bf2b50-a884-4cb5-9d57-dda9d19dd4b8
REPORT RequestId: 96bf2b50-a884-4cb5-9d57-dda9d19dd4b8 Duration: 506.62 ms Billed Duration: 600 ms Memory Size: 128 MB Max Memory Used: 80 MB
```

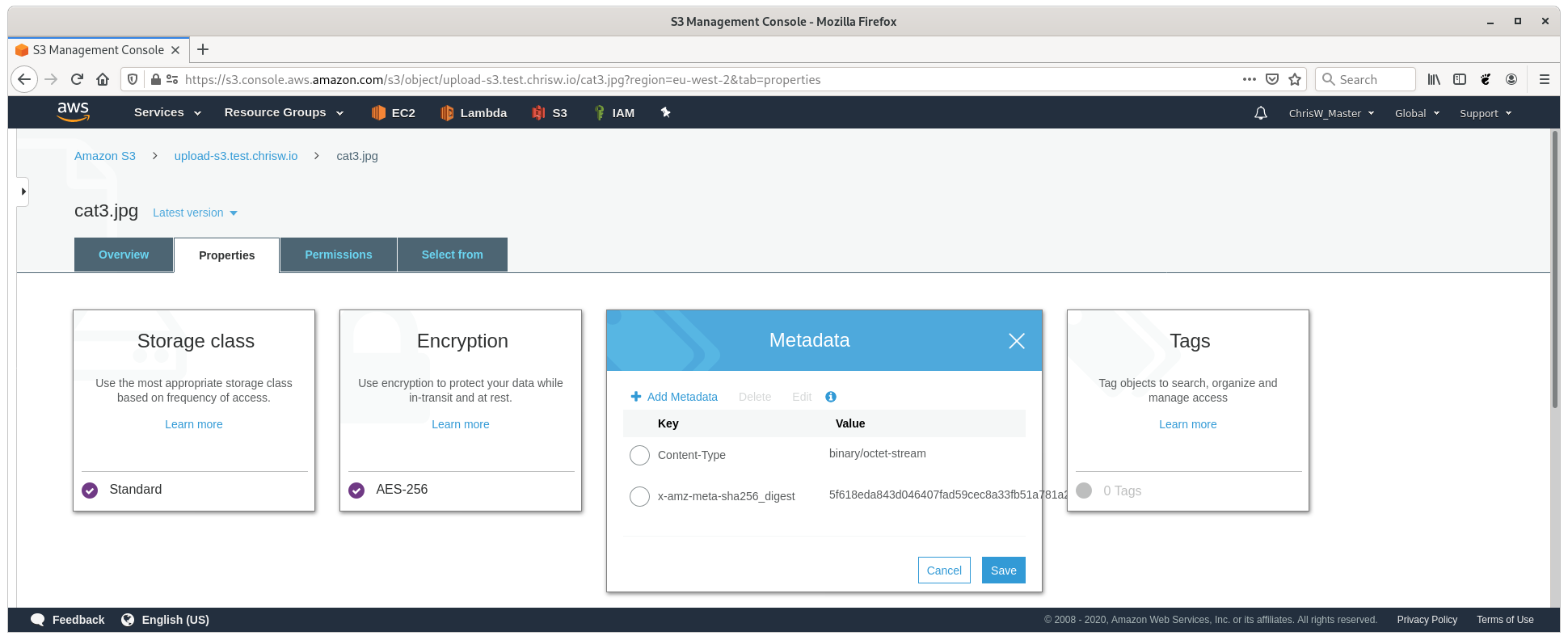

So, the code works when called manually and runs over each item in the bucket. To check with a new file, I uploaded cat3.jpg to the S3 bucket and ran the Lambda function again. After about a second, the metadata on the newly uploaded file was updated as shown in the screenshot below:

To check whether the value was correct I hashed the file locally with the following command:

```
sha256sum cat3.jpg
```

which resulted in:

```
5f618eda843d046407fad59cec8a33fb51a781a2ae12dabe9158ef1c943676db cat3.jpg
```

So the values match and the Lambda function works. However, it still has to be run manually, which isn't the aim. The aim is to detect a new file and update that file's metadata with its hash, without iterating through every item in the bucket and without any manual intervention.

Back in the AWS console, I created a new Lambda function and used the designer to add an S3 trigger for all object-create events in my bucket.

After taking a look at the docs at https://docs.aws.amazon.com/lambda/latest/dg/with-s3-example-deployment-pkg.html I updated the code to the following:

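```python
import json
import urllib.parse

import boto3
import hashlib

# Created once per execution environment and reused across invocations.
s3 = boto3.resource('s3')


def lambda_handler(event, context):
    for record in event['Records']:
        s3_bucket = record['s3']['bucket']['name']
        # Keys in S3 event notifications are URL-encoded (a space arrives as '+').
        s3_obj = urllib.parse.unquote_plus(record['s3']['object']['key'], encoding='utf-8')
        obj = s3.Object(bucket_name=s3_bucket, key=s3_obj)
        print(obj)
        response = obj.get()
        metadata = response.get('Metadata', {})
        if 'sha256_digest' in metadata:
            # The copy below fires another create event; this check ends that second run.
            print(obj.key, "metadata already holds the objects SHA256 hash of: ", metadata['sha256_digest'])
            continue
        print(obj.key, "has no SHA256 has yet in its metadata")
        file_hash = hashlib.sha256()
        file_hash.update(response['Body'].read())
        digest = file_hash.hexdigest()
        print(obj.key, "has the SHA256 has of", digest)
        # Copy the object onto itself to replace its metadata; put() without a
        # Body would overwrite the file with an empty object.
        s3.meta.client.copy_object(
            Bucket=s3_bucket, Key=s3_obj,
            CopySource={'Bucket': s3_bucket, 'Key': s3_obj},
            Metadata={'sha256_digest': digest},
            MetadataDirective='REPLACE',
            ContentType=response.get('ContentType', 'binary/octet-stream'),
        )
    return
```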

Using the Lambda console's test feature, I ran the Lambda function with a sample S3 trigger event. This resulted in the following error, because the bucket and object defined in the test data don't actually exist:

```
Response:
{
  "errorMessage": "An error occurred (AccessDenied) when calling the GetObject operation: Access Denied",
  "errorType": "ClientError",
  "stackTrace": [
    " File \"/var/task/lambda_function.py\", line 44, in lambda_handler\n file_hash.update(obj.get()[\"Body\"].read())\n",
    " File \"/var/runtime/boto3/resources/factory.py\", line 520, in do_action\n response = action(self, *args, **kwargs)\n",
    " File \"/var/runtime/boto3/resources/action.py\", line 83, in __call__\n response = getattr(parent.meta.client, operation_name)(*args, **params)\n",
    " File \"/var/runtime/botocore/client.py\", line 316, in _api_call\n return self._make_api_call(operation_name, kwargs)\n",
    " File \"/var/runtime/botocore/client.py\", line 635, in _make_api_call\n raise error_class(parsed_response, operation_name)\n"
  ]
}

Request ID:
"5aeed2b1-418c-477a-8429-6531316b1b92"

Function logs:
START RequestId: 5aeed2b1-418c-477a-8429-6531316b1b92 Version: $LATEST
s3.Object(bucket_name='example-bucket', key='test/key')
test/key has no SHA256 has yet in its metadata
[ERROR] ClientError: An error occurred (AccessDenied) when calling the GetObject operation: Access Denied
Traceback (most recent call last):
  File "/var/task/lambda_function.py", line 44, in lambda_handler
    file_hash.update(obj.get()["Body"].read())
  File "/var/runtime/boto3/resources/factory.py", line 520, in do_action
    response = action(self, *args, **kwargs)
  File "/var/runtime/boto3/resources/action.py", line 83, in __call__
    response = getattr(parent.meta.client, operation_name)(*args, **params)
  File "/var/runtime/botocore/client.py", line 316, in _api_call
    return self._make_api_call(operation_name, kwargs)
  File "/var/runtime/botocore/client.py", line 635, in _make_api_call
    raise error_class(parsed_response, operation_name)
END RequestId: 5aeed2b1-418c-477a-8429-6531316b1b92
REPORT RequestId: 5aeed2b1-418c-477a-8429-6531316b1b92 Duration: 2102.87 ms Billed Duration: 2200 ms Memory Size: 128 MB Max Memory Used: 80 MB Init Duration: 249.26 ms
```
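The console's sample event names `example-bucket` and `test/key`, which is why GetObject was denied. To run the function from the Test tab against a real object instead, a minimal event can be generated. This sketch uses a hypothetical helper, `make_s3_put_event`, and fills in only the fields the handler reads; a real notification carries many more. It assumes S3's key encoding can be approximated with `quote_plus` with `/` left unescaped, which `unquote_plus` in the handler reverses:

```python
import json
from urllib.parse import quote_plus


def make_s3_put_event(bucket: str, key: str) -> dict:
    """Build a minimal ObjectCreated:Put event for a Lambda console test (hypothetical).

    Only the fields the handler reads are included. The key is URL-encoded
    the way S3 notifications encode keys (spaces become '+').
    """
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket},
                    "object": {"key": quote_plus(key, safe="/")},
                },
            }
        ]
    }


# Paste the printed JSON into a console test event to target a real object.
print(json.dumps(make_s3_put_event("upload-s3.test.chrisw.io", "cat3.jpg"), indent=2))
```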

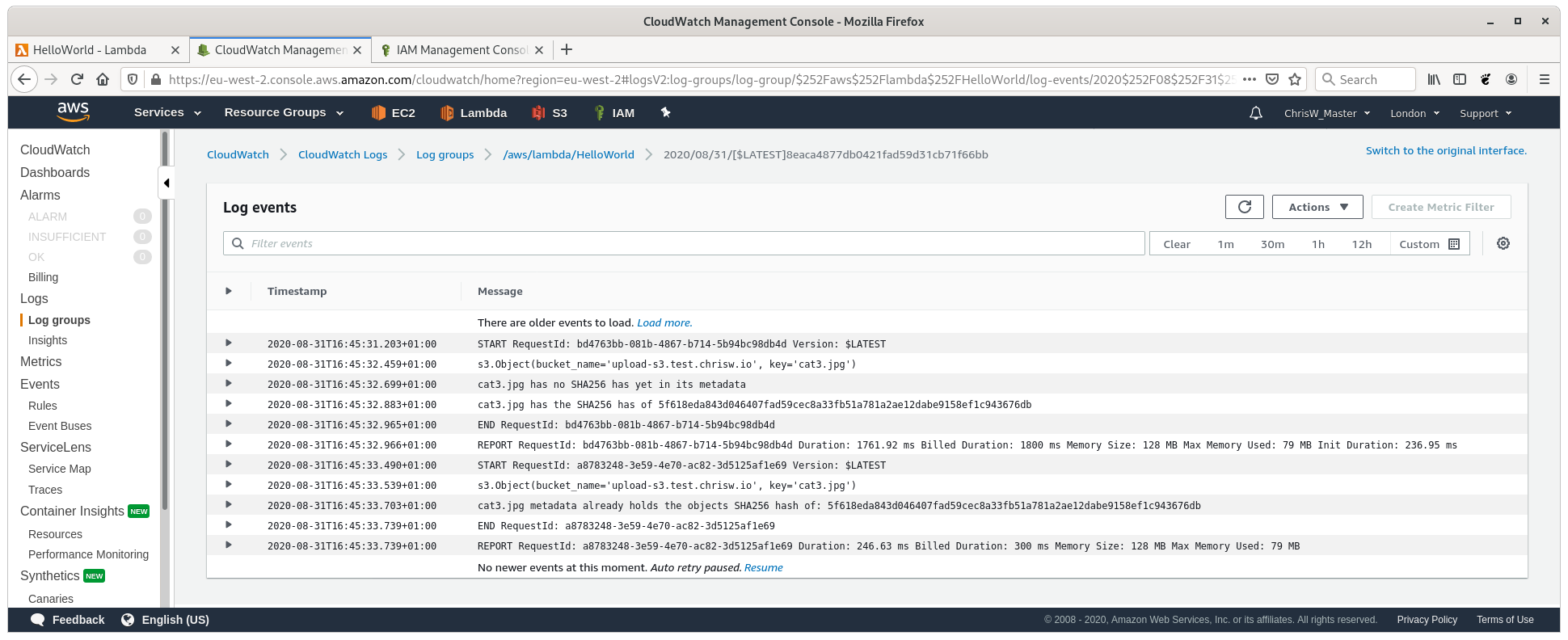

Running the test did prove that the code was reading the bucket and key from the event. To complete a real test, I deleted cat3.jpg and uploaded it again. After a few seconds I checked the metadata: the hash was present, and the CloudWatch log showed the expected lines, as below:

Here’s a screenshot of the final code in the Lambda code IDE: